Neural nets have been used to generate some very interesting results in Creative AI applications, such as face synthesis, music generation, and procedural modeling. The question here is, how can they be used with a classic game, Rollercoaster Tycoon 2?

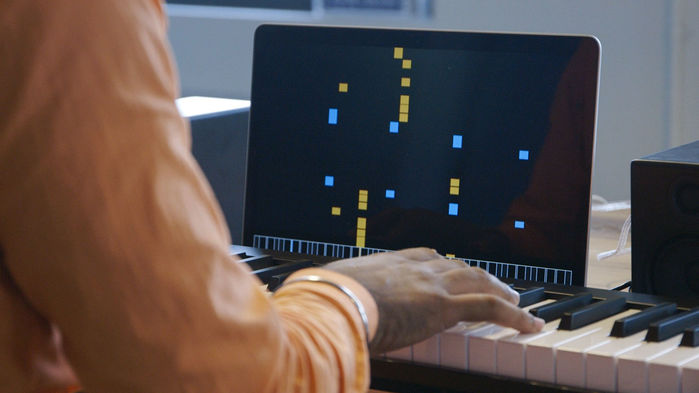

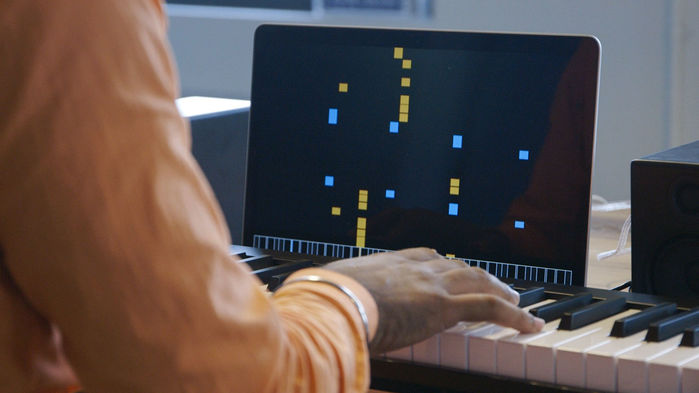

Word prediction will be useful to review here. To do word prediction, a sequence of words must first be mapped to a sequence of numbers. Then, this sequence of numbers is fed to a recurrent neural net to compute the likelihood of possible next entries in the sequence. These possibilities are ranked and converted back to words, then shown to you!

A sequence of RCT2 track pieces makes up a roller coaster, much like a sequence of words makes up a sentence. So, like in word prediction, a sequence of track pieces can be mapped to a sequence of numbers and fed to a recurrent neural net to compute the likelihood of potential next track pieces.
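As a minimal sketch of that mapping, the snippet below assigns each distinct track piece an integer index and converts a track back and forth. The helper names `build_vocab`, `encode`, and `decode` are hypothetical and not taken from the project's scripts, and reserving index 0 for padding is an assumption.

```python
def build_vocab(tracks):
    """Assign each distinct track piece a positive index (0 is kept free for padding)."""
    pieces = sorted({piece for track in tracks for piece in track})
    return {piece: i for i, piece in enumerate(pieces, start=1)}


def encode(track, vocab):
    """Map a list of track piece names to a list of indices."""
    return [vocab[piece] for piece in track]


def decode(indices, vocab):
    """Map a list of indices back to track piece names."""
    inverse = {i: piece for piece, i in vocab.items()}
    return [inverse[i] for i in indices]


tracks = [
    ["ELEM 25 DEG UP", "ELEM 25 DEG UP TO FLAT", "ELEM FLAT"],
    ["ELEM FLAT", "ELEM FLAT"],
]
vocab = build_vocab(tracks)
encoded = encode(tracks[0], vocab)
```

Sorting the piece names before indexing keeps the mapping stable between runs, which matters when a trained model is later reloaded for building.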

By taking the top prediction, adding it to the track, and feeding this new track back into the recurrent network, a complete roller coaster can be generated! So, let's see exactly how I did just this.
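The feedback loop can be sketched roughly as follows. This is an illustration rather than the code in the project: `generate_track` and `toy_predictor` are hypothetical names, the predictor is a stand-in for the trained network, and treating index 0 as an end marker is an assumption.

```python
def generate_track(predict_next, seed, max_length, end_token=0):
    """Greedily extend `seed` by repeatedly appending the top-ranked prediction."""
    track = list(seed)
    while len(track) < max_length:
        scores = predict_next(track)  # one score per vocabulary index
        best = max(range(len(scores)), key=scores.__getitem__)
        if best == end_token:  # assumed convention: index 0 ends the track
            break
        track.append(best)
    return track


def toy_predictor(track):
    """Stand-in for the network: always favors (last piece + 1) modulo 4."""
    scores = [0.0] * 4
    scores[(track[-1] + 1) % 4] = 1.0
    return scores


generated = generate_track(toy_predictor, seed=[1], max_length=10)
```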

All code is available here. These files will be referred to throughout this section. Everything is written in Python.

To implement this, the first thing we need is track data. Fortunately, RCT2 tracks can be saved and shared as .TD6 files, a run-length encoding format. I collected wooden roller coaster track files from two sources: those that come with the game, and those shared by users at rctgo.com.

The problem is simplified by using only wooden roller coasters, because wooden coasters don’t contain complex pieces like loops, twists, vertical drops, etc. The utility script ‘filter.py’ is used to select the wooden tracks (=34).

These .TD6 track files contain metadata about the track such as type, excitement/intensity/nausea ratings, scenery, etc., as well as the track itself. The track contains 2-byte track segments. The first byte corresponds to the track pieces (for example, ‘ELEM FLAT’ or ‘ELEM LEFT QUARTER TURN 5 TILES 25 DEG UP’), and the second byte contains bit-wise metadata such as color, chain lifts, and braking speed. More details can be found at RCTTechDepot.
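To make the two-byte layout concrete, here is a small sketch that splits track data into (piece, flags) pairs. It assumes the bytes have already been run-length decoded and trimmed to the start of the track data, and that a piece byte of 0xFF marks the end of the track; both are assumptions of this sketch, and `split_segments` is a hypothetical helper, not part of the project.

```python
def split_segments(track_bytes, terminator=0xFF):
    """Split decoded track data into (piece, flags) byte pairs.

    Stops at the assumed terminator byte or when fewer than two bytes remain.
    """
    segments = []
    for i in range(0, len(track_bytes) - 1, 2):
        piece, flags = track_bytes[i], track_bytes[i + 1]
        if piece == terminator:
            break
        segments.append((piece, flags))
    return segments


# Arbitrary example bytes, not taken from a real track file.
data = bytes([0x00, 0x04, 0x04, 0x84, 0xFF])
segments = split_segments(data)
pieces_only = [piece for piece, _ in segments]
```

Only `pieces_only` is needed for the prediction task; the flag bytes carry the cosmetic and mechanical metadata described above.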

For this project, we only really need to pay attention to the bytes that correspond to the actual track pieces. The script ‘track_extractor.py’ uses some heuristics to extract these pieces. There are many fragments and flags in the encoding that I don’t really understand. The script tries to account for these, but still fails for about half of the coaster files. This could certainly be improved with some more investigation into these run-length encodings. The extracted tracks used in this project can be found in the ‘extracted_tracks_wood’ directory.

Now for the neural network. This consists of ‘preprocess.py’, ‘nn_train.py’, and ‘nn_build.py’. This implementation uses the TensorFlow library.

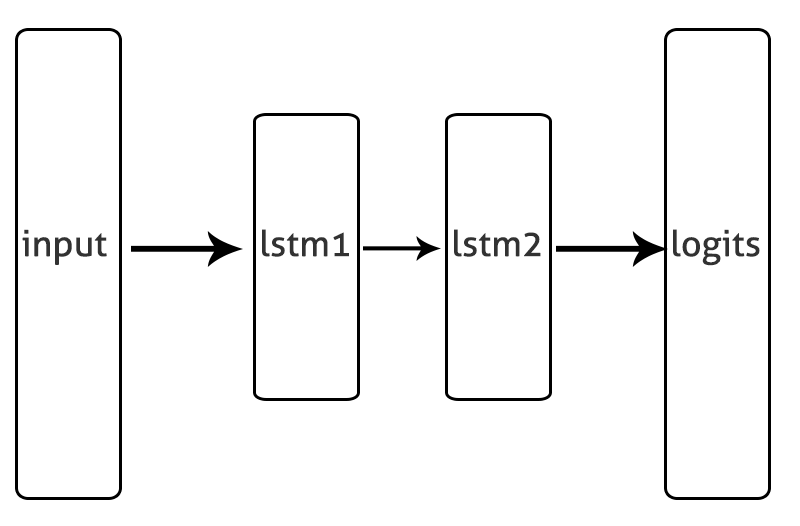

The neural network, as shown in the diagram above, takes [batch_size x window_size] sequences of track piece indices, which are converted to one-hot vectors. These are fed to two stacked LSTM layers, from which a [batch_size x window_size x vocab_size] set of logits is calculated. In training, the sparse softmax cross-entropy loss across these logits is minimized. In building, the last entry of the first sequence in the batch (logits[0][-1]) is a [vocab_size]-sized vector that, once passed through a softmax, can be treated as a probability distribution for the next track piece.
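To illustrate what happens to a single [vocab_size] logit vector, the following pure-Python sketch computes the softmax, the sparse cross-entropy loss for one position, and the ranked top suggestions. It does not use TensorFlow and is not the project's code; the function names and the example logits are made up for illustration.

```python
import math


def softmax(logits):
    """Convert a vector of logits into a probability distribution."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_softmax_cross_entropy(logits, label):
    """Loss for one position: negative log-probability of the true index."""
    return -math.log(softmax(logits)[label])


def top_k(probs, k):
    """Indices of the k most likely next pieces, best first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


last_logits = [0.5, 2.0, -1.0, 1.0]  # stands in for logits[0][-1]
probs = softmax(last_logits)
ranked = top_k(probs, 2)
```

"Sparse" here means the label is given as an integer index rather than as a one-hot vector, so the loss only needs the probability at that one index.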

To prepare the data to be fed into the neural network, the track pieces must be indexed and arranged into a [batch_size x window_size] matrix. This is handled in ‘preprocess.py’.
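One common way to arrange an index sequence into such matrices is sketched below. The actual ‘preprocess.py’ may batch differently; `make_batches` is a hypothetical helper, and pairing each input window with a target window shifted one step ahead is an assumption based on the usual next-item prediction setup.

```python
def make_batches(indices, batch_size, window_size):
    """Cut one long index sequence into [batch_size x window_size] input/target pairs.

    Targets are the inputs shifted one step ahead, so every position is
    trained to predict the piece that follows it.
    """
    step = batch_size * window_size
    batches = []
    for start in range(0, len(indices) - step, step):
        chunk = indices[start:start + step + 1]
        inputs = [chunk[r * window_size:(r + 1) * window_size]
                  for r in range(batch_size)]
        targets = [chunk[r * window_size + 1:(r + 1) * window_size + 1]
                   for r in range(batch_size)]
        batches.append((inputs, targets))
    return batches


batches = make_batches(list(range(13)), batch_size=2, window_size=3)
```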

Some additional problem-specific augmentations are used. First, ‘covered’ track pieces are mapped to their corresponding regular track pieces, an easy simplification with only cosmetic ramifications.

Also, some constraints are hard-coded into the building script. These are based on constraints in the game for which track piece can come after another. For example, ‘ELEM FLAT’ can’t come after ‘ELEM 25 DEG UP’ - an ‘ELEM 25 DEG UP TO FLAT’ would need to come in between. A dictionary of these constraints can be found in ‘segments.py’. If a suggested next element isn’t found in this dictionary of possible sequential pieces, it’s ignored.
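A lookup of this kind might look like the sketch below. The dictionary here is a tiny hypothetical excerpt for illustration only (the real table lives in ‘segments.py’ and is far larger), and `filter_suggestions` is an assumed helper name.

```python
def filter_suggestions(ranked, previous, allowed_next):
    """Keep only the ranked suggestions that may legally follow `previous`."""
    allowed = allowed_next.get(previous, set())
    return [piece for piece in ranked if piece in allowed]


# Hypothetical, incomplete excerpt of the successor table.
ALLOWED_NEXT = {
    "ELEM 25 DEG UP": {"ELEM 25 DEG UP", "ELEM 25 DEG UP TO FLAT"},
    "ELEM 25 DEG UP TO FLAT": {"ELEM FLAT"},
}

ranked = ["ELEM FLAT", "ELEM 25 DEG UP TO FLAT", "ELEM 25 DEG UP"]
valid = filter_suggestions(ranked, "ELEM 25 DEG UP", ALLOWED_NEXT)
```

Because the filter preserves the ranking order, the first entry of `valid` is still the network's most likely legal piece.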

Finally, tracks are padded with [window_size] zeros at the beginning, to give clear indications of where tracks start and end, and so a matrix of zeros can be used as initial input in building.
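The padding step could be sketched like this, assuming index 0 is reserved as the padding value; the helper names are hypothetical.

```python
PAD = 0  # assumed padding index, not used by any real track piece


def pad_track(indices, window_size):
    """Prefix a track with window_size padding zeros."""
    return [PAD] * window_size + list(indices)


def concatenate_tracks(tracks, window_size):
    """Join padded tracks into one sequence; each run of zeros marks a boundary."""
    sequence = []
    for track in tracks:
        sequence.extend(pad_track(track, window_size))
    return sequence


def initial_input(batch_size, window_size):
    """All-zero matrix used as the first input when building a new track."""
    return [[PAD] * window_size for _ in range(batch_size)]


sequence = concatenate_tracks([[3, 1], [2]], window_size=2)
```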

The final system is a simple interactive console application, embedded directly into ‘nn_build.py’.

The system provides a suggestion for the next track piece.

The user can accept this suggestion by pressing enter. This new piece is added to the list of segments, which is printed after each new entry, as shown.

If a user doesn’t like the next suggestion, they can type ‘n’ to cycle through the top five suggestions, accepting whichever they prefer.

Finally, if a user doesn’t like any of these suggestions, they can enter their own next track piece.
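The interaction described above can be approximated with a short loop like the one below. This is a sketch, not the code in ‘nn_build.py’: `choose_piece` is a hypothetical function, it assumes a non-empty list of suggestions, and the `read` parameter exists only so the loop can be driven without a real console.

```python
def choose_piece(suggestions, read=input):
    """Return the piece the user settles on from up to five ranked suggestions."""
    top = suggestions[:5]
    position = 0
    while True:
        answer = read(f"Next piece [{top[position]}] (Enter=accept, n=next): ").strip()
        if answer == "":
            return top[position]  # accept the current suggestion
        if answer == "n":
            position = (position + 1) % len(top)  # cycle to the next suggestion
        else:
            return answer  # anything else is taken as the user's own piece
```

For example, a scripted session that rejects the first two suggestions and accepts the third can be simulated by passing `read=lambda prompt: next(answers)` with `answers = iter(["n", "n", ""])`.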

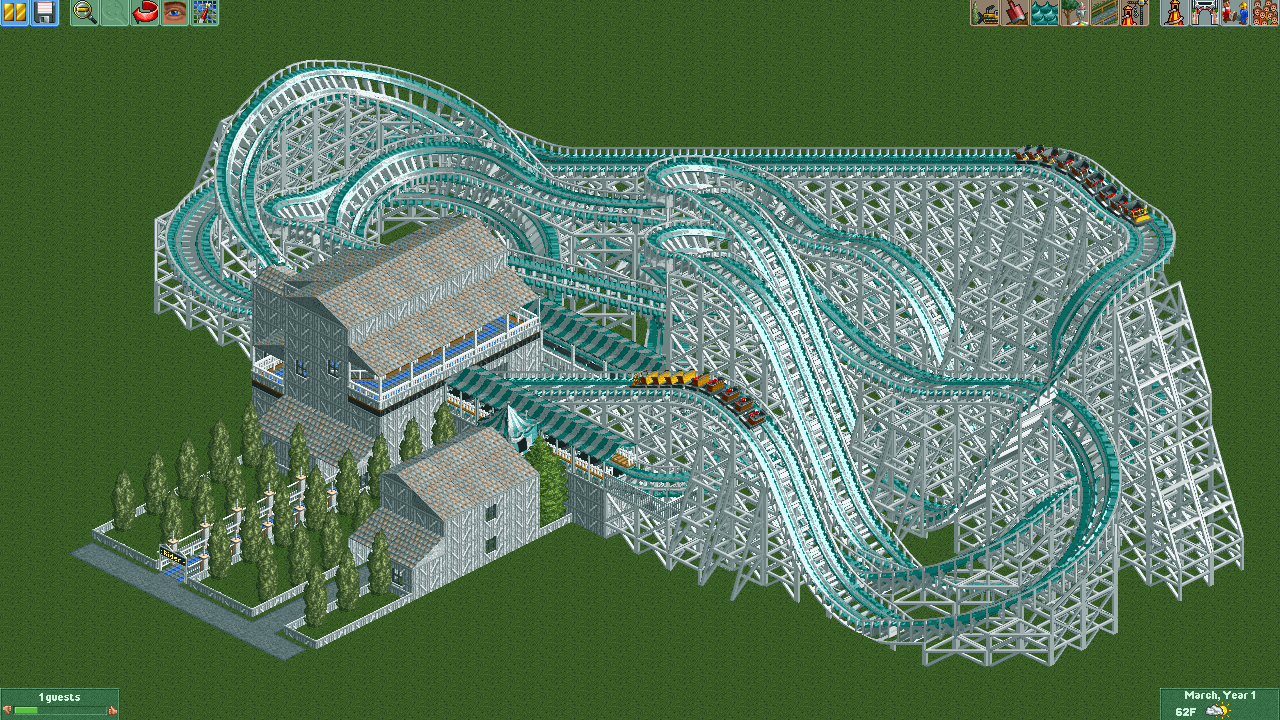

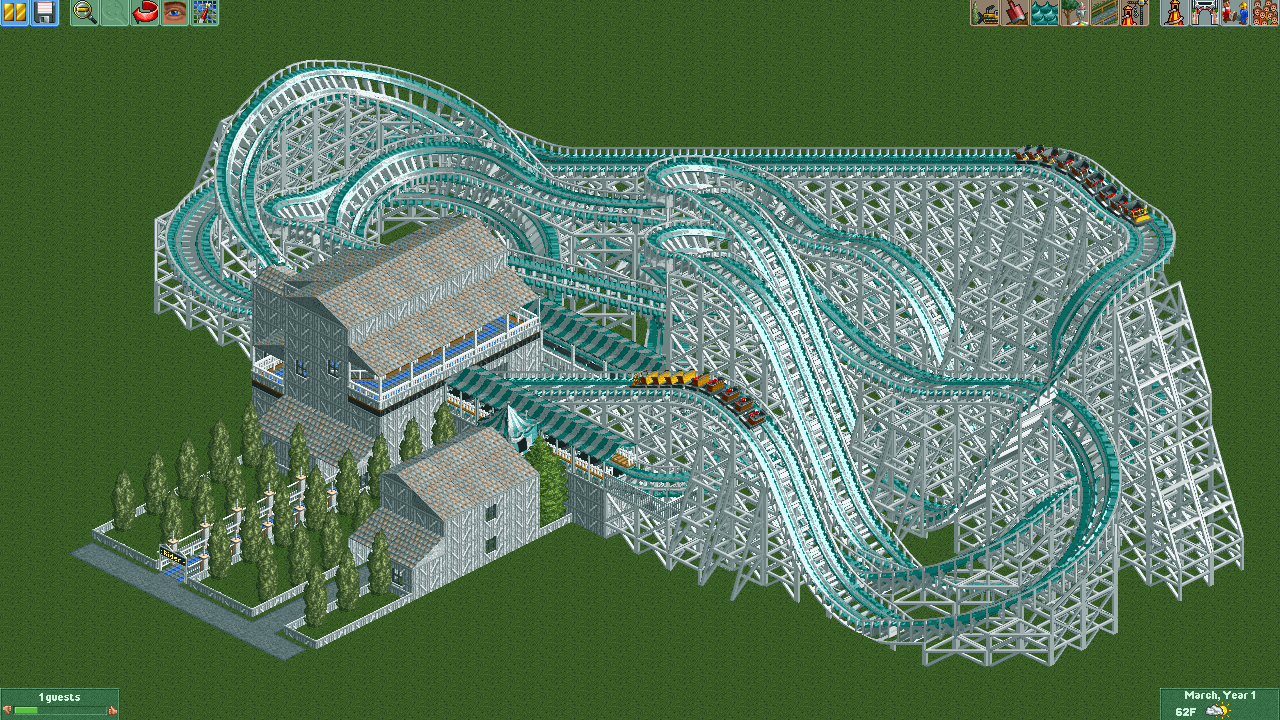

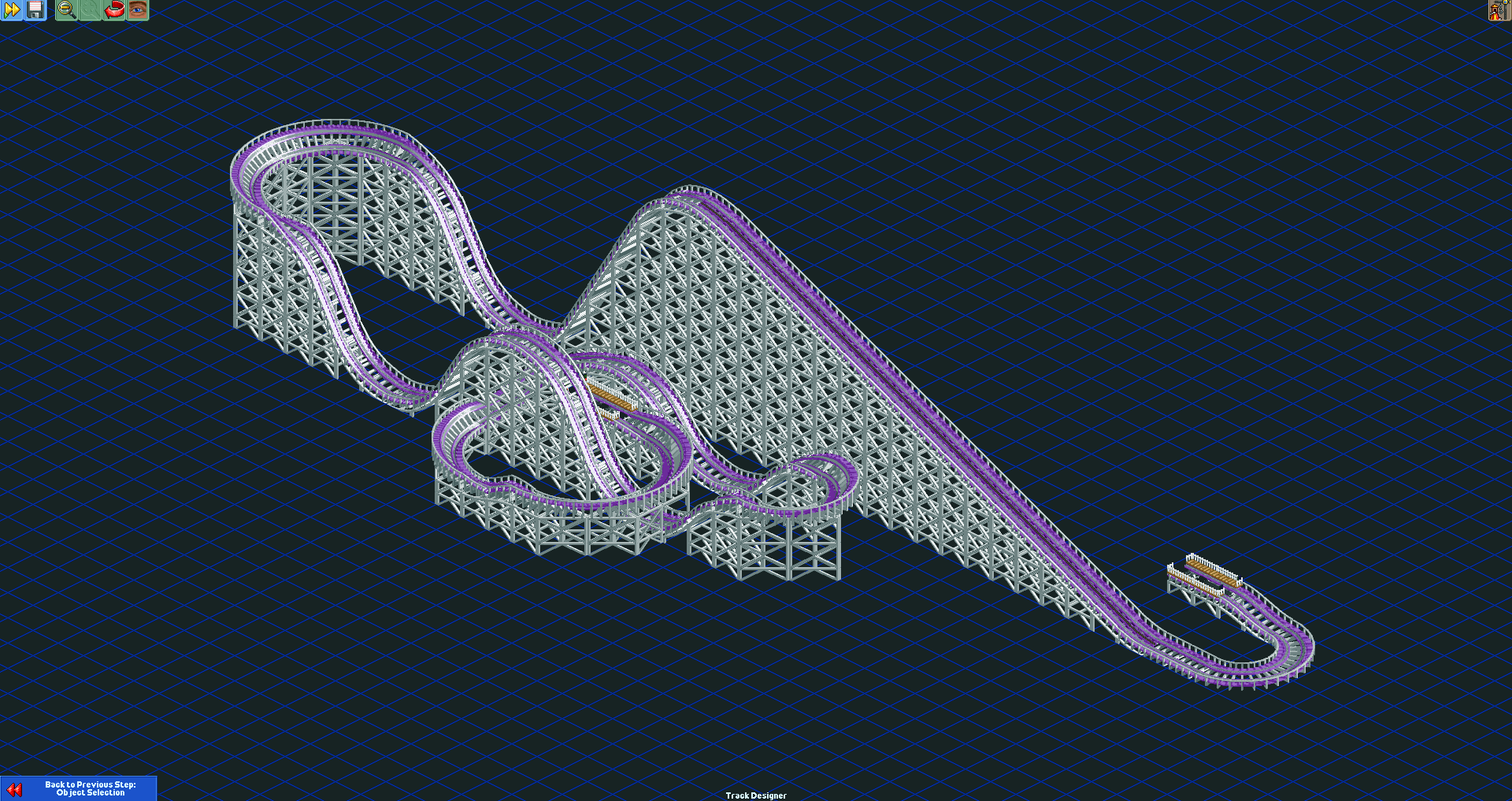

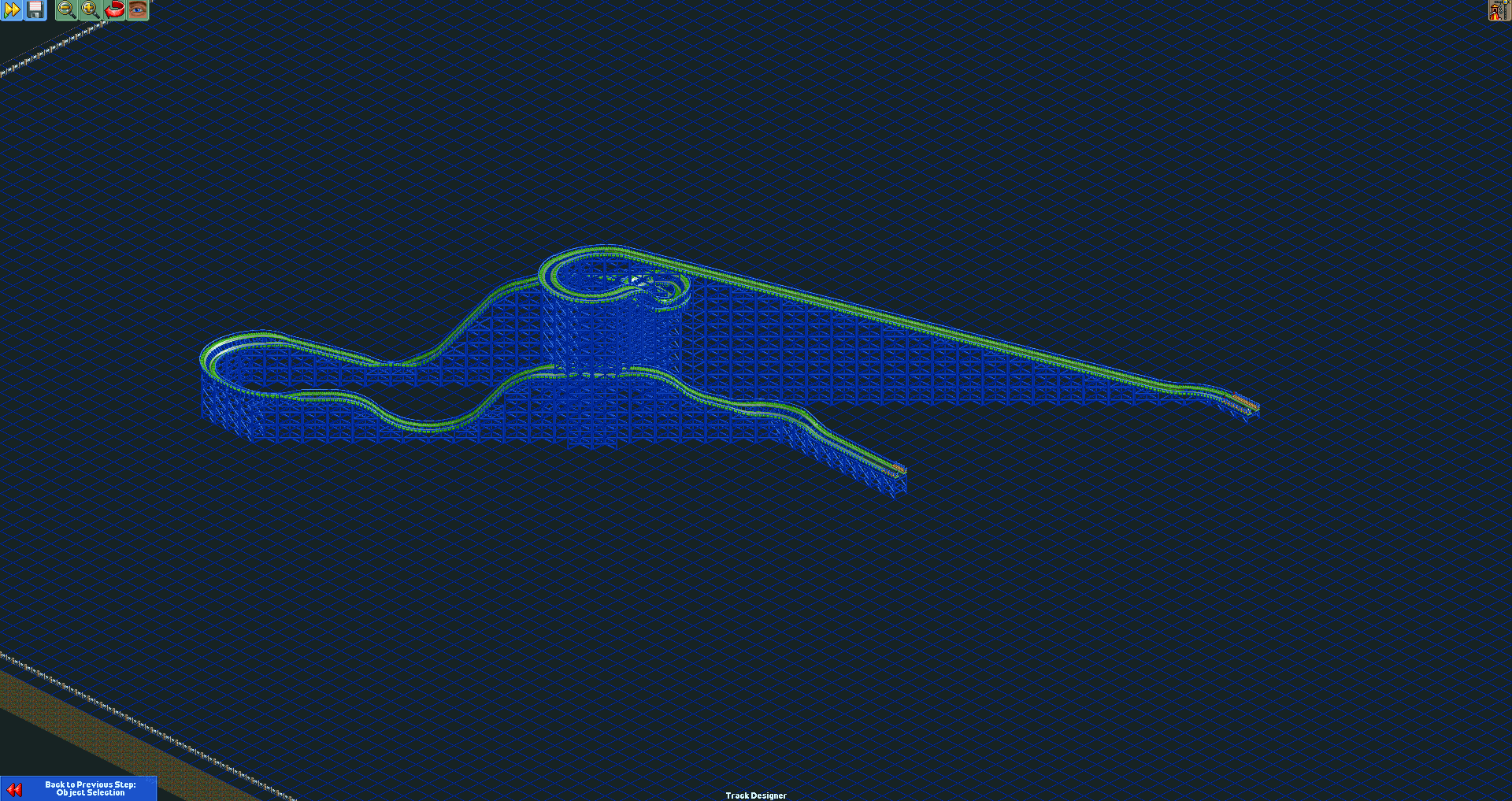

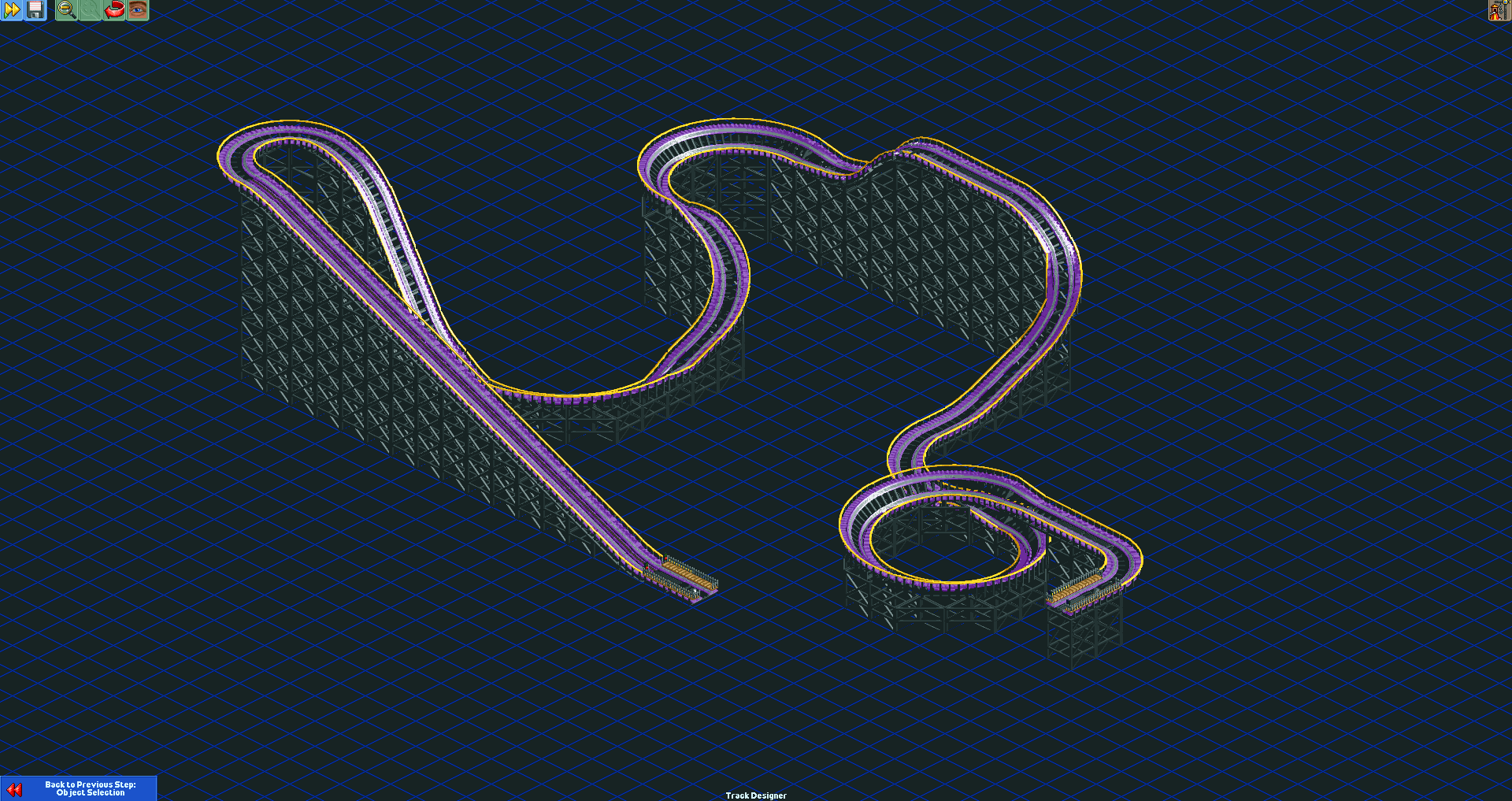

Here are some examples of roller coasters that were generated with this system:

Obviously, this is just a console application, with no integration into the game. However, it would be possible to integrate this directly into the open source implementation of RCT2.

Additionally, there are some limitations caused by the fact that this system is purely data-driven. Some of these are listed below:

One idea for how to remedy these problems is to mix this approach with reinforcement learning. The latest track piece can be treated as a state, and building the next piece as an action. Things like closing the circuit can be treated as rewards, and collisions as failures. It would be very interesting to see how this would look!